(By Alessandra Briganti)

So far there has been no fake news

'apocalypse' in the artificial intelligence era, no

'AI-pocalypse Now'.

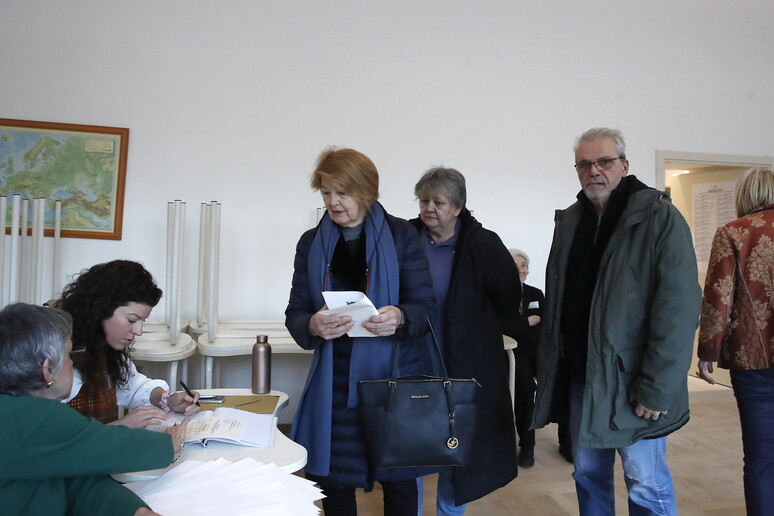

The "super election year" 2024 did not see the tsunami of

AI-driven disinformation that many

analysts had feared, according to a study by the Munich Security

Conference.

It said that, although artificial intelligence aggravates the

threat of disinformation operations by providing more powerful

instruments with which to create and spread fake news, its

impact so far has been "negligible".

The World Economic Forum had ranked "AI-generated misinformation

and disinformation" as one of the main threats to global

stability in 2024, when votes were cast in more than 60

countries accounting for over half the world's population.

Yet the atomic bomb of fake news created by AI did not go off.

According to the experts, AI-enabled tactics in disinformation

campaigns were both less prevalent and less impactful than

expected.

"The few salient cases included French far-right campaigners

using some AI-generated images, for instance depicting migrants

arriving on France's shores," the study said.

"Similar right-wing GenAI imagery was used in the EU elections.

In the UK, GenAI content only went 'viral' in a handful of

cases".

So the nightmare scenarios forecast by some analysts did not

materialize and there were several reasons for this, according

to the Munich Security Conference.

The first is government intervention, along with tech companies'

efforts to limit the spread of deceptive content.

Second, leaders in the campaign industry may be delaying the

adoption of AI, in the US for example, fearing potential

reputational costs.

Furthermore, the use of AI disinformation may not influence

voting habits in a significant way as most voters hold firm

voting preferences regardless of new information, real or

fabricated.

The final factor the experts highlighted is the level of

sophistication of tactics to manipulate content with AI and

spread it. So far, actors have favoured conventional methods

in which they are already highly practised.

The report said that this year the "atomic bomb" of AI

disinformation may not have detonated, but the fuse has been lit,

and it warned against lowering one's guard against worrying new

trends regarding the potential impact of AI on democracy.

First of all, AI tools are rapidly becoming more sophisticated.

Pervasive AI content is already making it more difficult for

citizens to sift through information online, with the risk of the

public becoming disengaged from political news.

ALL RIGHTS RESERVED © Copyright ANSA